Creating an accurate cost model to manage, measure, and bill IT services is a crucial step in organizational maturity. But it doesn’t come without challenges. Thankfully, a little strategy and forethought is all it takes to avoid getting sidetracked by the common issues most, if not all, cost models face.

Our recent eBook, A Practitioner’s Guide to Cost Modeling, outlines the three biggest and most common challenges to watch for, along with how to plan for each one.

The excerpt below covers the key points, and you can download a full copy of the eBook instantly by clicking here.

The first challenge is data. Data issues are among the biggest barriers to any new cost modeling initiative: you will inevitably discover gaps in data availability and quality that limit how accurate your model can be.

There’s almost always enough good data to begin a cost modeling initiative. The important thing is to take the first step; don’t let data issues scare you away.

Start small, limit your model’s scope, and let your core objectives guide the way.

Pick a small set of services to begin costing; limit your model to a select few applications or consumers; and focus on a predetermined set of questions you want to answer.

There’s nothing wrong with using proxy drivers and alternate methods to get your model off the ground. And once you have some outputs to work with, you can start iterating to address problematic data over time.

This approach helps you get a few small wins under your belt to prove the value of your initiative, while building momentum and excitement to move forward.

The second challenge is the temptation to rely on “directionally correct” assumptions drawn from your model’s outputs, especially while you’re still working through problematic data. “Directionally correct” insights are true in broad strokes, but they are not 100% accurate.

It can be okay to use “directionally correct” assumptions for certain types of decision-making, but they won’t hold up for use cases like chargeback or showback, where stakeholders won’t tolerate anything less than complete accuracy.

You don’t have to write off “directionally correct” assumptions entirely. You won’t always be able to achieve 100% accuracy, and that’s okay.

The key is understanding when “directionally correct” can give “good enough” insight to guide a decision. Acting today on 75% accuracy is often better than taking the same action six months down the road at 90% accuracy.

So how do you make that call? The answer is to work with the business and be honest about how accurate your “directionally correct” insights really are.

Educate stakeholders on your model, its assumptions, and the conclusions you’re drawing from the outputs. Don’t hold anything back. Translate everything into terms stakeholders understand and pursue better decision-making together.

The third challenge is complexity. Every force in your universe is pushing to increase the number of services in your catalog, which makes your model harder to manage and drives up overall complexity.

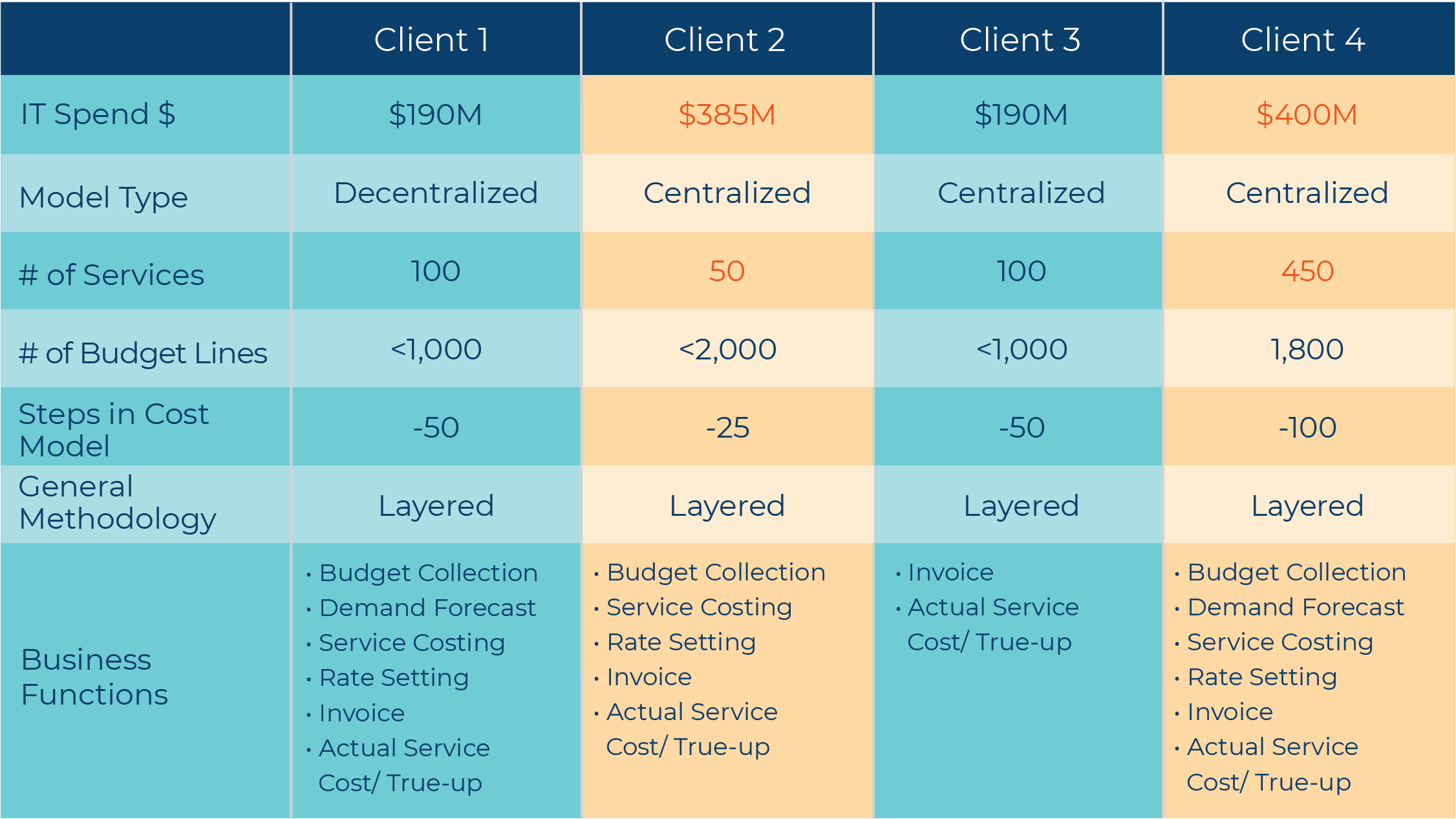

To illustrate further, the chart below shows four Nicus clients, their budget sizes, and some of the high-level details of their cost modeling programs:

Take a close look at clients 2 and 4. Does anything look strange?

Notice how similar the two clients are in almost every way except the size of their service catalogs. How can two businesses pursue the same goals, with the same amount of spend and the same type of model, yet end up with massively different service catalogs?

The answer is runaway complexity.

Most stakeholders will “demand” a greater level of detail and granularity in the service catalog. But they don’t always have good reasons for doing so. And unless IT is prepared to answer those demands with fact-based responses, complexity can and will explode out of control.

Managing complexity in your model and service catalog doesn’t have to be difficult. Follow these simple guidelines to avoid runaway complexity:

Interested in learning more about how you can use cost modeling within your organization? We’ve assembled an eBook that explains all the fundamentals you need to get started: A Practitioner’s Guide to Cost Modeling.

Click here to get your copy of the full eBook delivered instantly.